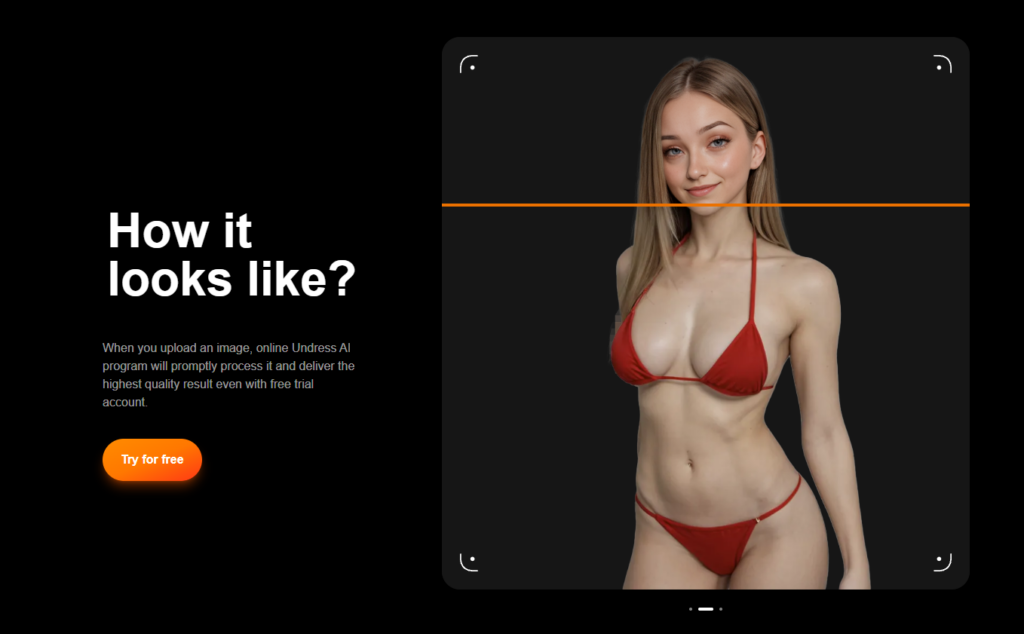

From Fun to Controversy: Public Perception of Adult AI Tools Like Deepnude AI

Adult-focused AI tools went from obscure novelty to a mainstream topic of discussion in a surprisingly short time. Early adopters treated them as an amusing gimmick, a new category of creative software that felt edgy but harmless. That view did not last. As awareness grew, the public conversation shifted to consent, privacy, and how easily a person's image could be turned into sexual content without their permission. As a result, opinion is divided: some see adult AI as an extension of digital fantasy and personalization, while others see it as a dangerous space that amplifies harassment and abuse.

Early Reactions to Adult AI and the Appeal of Novelty

Adult AI gained visibility amid the broader excitement around image generation and customization. Early interest often came from experimentation rather than malicious intent. People tested what the models could do, compared output styles, and traded feedback in tech forums where a "new feature" is often treated as reason enough to try something.

A key factor in early adoption was a perceived distance from real-world consequences. When content looked obviously synthetic, users assumed it belonged in the same category as erotic illustration or fantasy role-play. That assumption breaks down once tools process familiar faces, real photographs, or easily identifiable people. As realism and identifiability increase, the ethical stakes rise quickly.

Anonymity played a role, too. Many adult AI tools appeared in spaces where people could push boundaries without tying the activity to their identity. This lowered social barriers and sped up experimentation. It also reduced accountability, which later became central to the public backlash.

How “Deepnude AI” Became a Social Flashpoint

The term deepnude ai now often serves as shorthand for a broader concern: AI systems that can generate sexual images tied to real people. The public shift from "strange tech gimmick" to "cultural problem" happened as stories spread about abuse, humiliation, and targeted harassment.

Public debate intensified because the path to harm is direct. It requires no specialist skills, and once the content exists, sharing it is easy. This reinforces the view that adult AI is not simply another media format but an amplifier for privacy violations.

Several factors pushed public opinion toward controversy:

- Greater realism made synthetic images harder to dismiss as "obviously fake."

- Social platforms enabled fast, wide distribution.

- Victims often suffered psychological harm even when the content was proven fake.

- Reporting and takedown processes were inconsistent and slow.

- Legal and policy responses lagged behind patterns of use and abuse.

Once these patterns emerged, adult AI was no longer seen purely as a technology. It came to be viewed as a governance problem: who is protected, who remains exposed, and what remedies exist when harm occurs.

Ethical Issues Shaping Public Opinion

Consent is the central issue. Public acceptance drops sharply when tools generate sexual content from someone's likeness without their explicit permission. Even without physical contact, the reputational and psychological effects can be severe. Many people regard it as a form of sexual violation because it involves sexualized depiction without consent.

Privacy is the next pressure point. Adult AI can turn ordinary photos into compromising material. This changes how people think about posting images online, especially public-facing individuals, creators, and those vulnerable to stalking or harassment.

There is also a fairness dimension. The burden of protection usually falls on the victim rather than the creator. Victims must prove the content is fake, request takedowns, and deal with the social fallout. This imbalance fuels public distrust and strengthens calls for regulation.

Finally, adult AI creates a credibility problem. If sexual images can be produced at scale, both real and fake content can be weaponized. Victims may be disbelieved when they report genuine abuse, and perpetrators may claim "it's AI" when confronted with real wrongdoing. This uncertainty deepens public anxiety and hardens opposition to the entire sector.

Regulation, Platform Policies, and Public Response

Public opinion is also shaped by how institutions respond. When platforms treat synthetic sexual content as a serious category of harm, users may feel safer. When enforcement is inconsistent, public trust declines.

Regulators have moved unevenly. Some jurisdictions have expanded laws on intimate image abuse and deepfakes, while others rely on older harassment or defamation frameworks that are poorly suited to synthetic content. Even where laws apply, cross-border enforcement is difficult, and victims may struggle to identify anonymous creators.

Platform policies have become a first line of defense. Online communities and hosting services increasingly ban non-consensual sexual content, including synthetic forms. The challenge is detection and enforcement at scale. Automated filters can miss altered variants or flag content incorrectly, while human review is slow and can be traumatic for moderators.

Public response has shifted toward prevention and awareness. People are watermarking personal photos, limiting the high-resolution images they share publicly, and using tools to monitor for misuse. These measures are not enough, but they reflect a broader shift: public expectations are moving toward proactive protection rather than after-the-fact remediation.

Why Public Opinion Keeps Shifting

Adult AI sits at the intersection of two realities. One involves legitimate adult expression and fantasy content that does not harm real people. The other involves tools that can be misused for humiliation, harassment, and coercion. Public opinion shifts depending on which reality dominates the conversation.

As the technology improves, the sector becomes more visible and its risks become easier to illustrate. This generally pushes opinion toward caution. At the same time, clearer standards may emerge. People are more willing to accept adult AI innovation when consent is clear, abuse of real people's likenesses is prevented, and enforcement is meaningful.

The long-term trajectory likely depends more on safeguards than on capability. If consent, accountability, and effective remedies are treated as core features rather than add-ons, adult AI could enter public discussion without provoking immediate backlash. Without those guardrails, controversy will remain the default response, because the costs of misuse fall hardest on the most vulnerable people.